The Genie in the Bottle: An Introduction to AI Risk and Alignment

Reasons why AIs might one day kill us all and why you should be at least a little worried.

The age of intelligent machines

The claim that we might one day build machines that are so intelligent that they will wipe out the human race strikes most people as patently absurd. How can someone even have the hubris to believe that we, as mere humans, will be able to create an intelligent being much more advanced than ourselves and that this intelligence can ultimately lead to our demise? I confess, it does feel very far-fetched, if not ridiculous. But it’s not as unlikely as you might first suppose once you’ve examined the problem more deeply.

The recent advancements in AI have introduced questions of AI risk and safety into the public consciousness. And although most of us normies have only come across these topics through science-fiction books and movies, some people have been thinking about them for decades. They refer to the challenge of ensuring that AI systems behave as we intend and do not harm humans, either accidentally or intentionally, as the alignment problem. The premise is that AI systems are unlike regular computer programs in that they can exhibit non-obvious, novel behaviors and generate solutions that may not be what humans would have intended, given that they are intelligent.

At the heart of the AI alignment and AI risk discussions is the idea of creating generally intelligent, or more specifically, power-seeking autonomous agents with the advanced capabilities that being highly intelligent confers.

Advanced AIs are unlike other technologies like nuclear weapons because intelligence is more fundamental than specific technologies. All inventions and technologies are the results of intelligence + applied knowledge. To get nuclear weapons, you first need (enough) intelligence. In a sense, anything that we would want to do or that can be discovered about the universe is just a matter of having the right knowledge. Intelligent machines with capabilities far superior to humans or human organizations could thus invent and create nearly anything that is physically possible - given enough time. Depending on what the AI “wants,” we may be in big trouble.

Therefore, the AI alignment problem is fundamentally a question about what happens when we create machines that are more intelligent than humans in every way. How do we control something that is more intelligent than us?

The genie in the bottle

The risks posed by AI include existential risks to humans, meaning complete extinction, but also other forms of catastrophic risks that may impact human well-being and societal stability.

These risks arise in two main ways:

Either the AI is given the wrong goal or a badly formulated goal that leads to bad stuff,

or the AI develops its own goals or takes actions that are more or less unaligned with human goals.

The basic argument is quite simple: a sufficiently advanced AI that is given a goal (by humans), either explicitly or implicitly, may try to achieve that goal in ways that cause harm to humans, completely disempower us, or drive us extinct. Not because it's malevolent or wants to inflict harm, but because the best way to achieve its goal may be to harm humans in the process or because it "doesn't care."

You can get an intuition for the problem by considering the age-old story of the genie in the bottle:

You get three wishes and can wish for anything; your wishes don’t turn out like you intended because the genie grants you the wishes literally. You’re forced to use the last wish to walk back the previous two that resulted in disaster.

Unfortunately, in the case of AI, we may only be granted one wish, and if we get it wrong, it could be the last wish we make. Be careful what you wish for... [1]

Paperclips

The most popular illustration of the alignment problem is the "paperclip maximizer" [2]: a super advanced AI with the goal of creating as many paperclips as possible. After having maximized production through traditional industrial means, it realizes that it can produce even more by consuming other resources, so it decides to break down all matter in the universe and use it to create paperclips. Being smart, it realizes that humans might want to stop it, so it must eradicate all humans.

If this kind of existential risk is even remotely likely, the next question is, why would we even want a generally intelligent AI in the first place?

Given that intelligence is the basic ingredient needed for innovation, a truly advanced AI with human-aligned goals can have many world-changing benefits. These benefits include the potential for significant scientific advancements in fields like medicine, energy production, and space exploration, to name a few examples, with the resulting enhancements of economic productivity and growth. Rather than having to do decades of R&D into a cure for cancer or fusion energy, a sufficiently advanced AI can discover how to do it in a matter of weeks or days, if not faster.

Nevertheless, to see why alignment of highly intelligent and capable machines is a problem to take seriously, consider the following questions and assumptions:

There’s no reason to assume that true intelligence can only exist in biological matter or that we are the pinnacle of intelligence in the universe.

Therefore, machines can also become very intelligent, perhaps more intelligent than us.

We don’t know how to control something that is much more intelligent than ourselves.

With a highly advanced intelligence (that we don’t really control), there are more ways that things could go wrong than right.

A very advanced intelligence is unlikely to value humans as much as we do, and we may end up being collateral damage along its path.

There are good reasons to believe that we are not that far away from creating such an advanced artificial intelligence.

You may object to some or all of these assumptions, and there are good reasons to, but it would be intellectually dishonest or naïve to dismiss them completely, especially as a decent number of very smart people and researchers within the field are saying that we should worry. At the very least, if you understand the need to worry about a giant asteroid wiping out all life on Earth or the threat of all-out thermonuclear war - even though you find it very unlikely - it also makes sense to be at least a little worried about existential AI risk.

In this article, I will address the basic arguments and reasoning that underlie the AI risk debates, as well as other related worries.

What is Artificial Intelligence?

Before we discuss AI alignment and risk in more depth, let’s get back to basics. What is AI?

Artificial Intelligence usually refers to computer programs that - compared to “regular” linear programs - can learn and adapt to changing circumstances, even reason, and solve novel challenges in pursuit of a goal. Simplifying things massively: regular software programmed to drive a car from location A to location B would need detailed step-by-step instructions at every turn. An AI program driving a car can “figure it out” itself, given the goal of getting from A to B. Such programs have been trained on vast amounts of data and can learn. Basically, they behave in more human-like ways compared to regular computer programs.

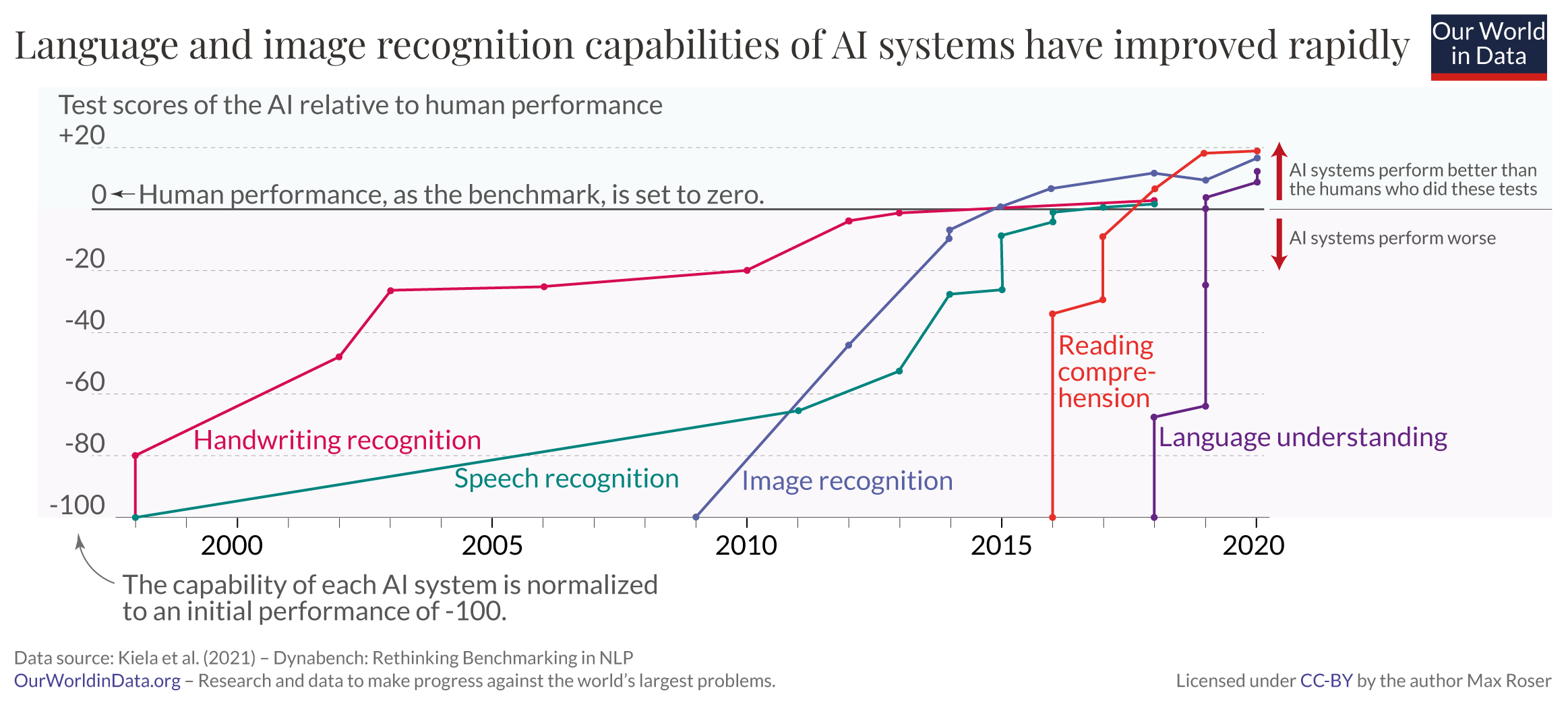

Although work on AI in computer science goes back to the middle of the last century now, it’s only in the last decade or so that AI programs have started making their way into consumer applications and tools on a broad scale.

My impression is that the popular conception of AI is as a robot humanoid that you can talk to like a human and that behaves “intelligently.” We all understand that Google Assistant, Siri, and Alexa are some kinds of AIs and that ChatGPT is another one these days. But AI, in the broadest sense, is much more than that. The machine learning algorithms that power our social feeds, recommendations on streaming services, and shopping websites are artificial intelligence programs that “learn” what we like and show us more of that. AI is also used (obviously) in self-driving cars, medical diagnosis, and fraud detection, among other things.

It’s worth noting that AI is not a monolithic technology but rather a collection of different techniques and approaches used to build intelligent systems, like machine learning, natural language processing, computer vision, and robotics, to name a few.

Generative AI

In recent years and months, ChatGPT, DALL-E, and other Generative AI tools have gotten extremely powerful by being trained on vast amounts of data through a machine learning technique called Deep Learning, which uses neural networks to recognize patterns in their training data. For example, an AI can be trained on pictures of fish to distinguish a salmon from a seabass. As the AI makes guesses, a feedback signal (a “reward function” or, in this kind of training, more commonly a loss function) scores each answer, and the AI is adjusted after each round so that it gets progressively better at predicting the right answer.
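The guess-and-correct loop described above can be sketched in a few lines. This is only a toy illustration, not how ChatGPT or DALL-E are actually trained: it uses a perceptron (a single artificial neuron) instead of a deep neural network, it looks at one number per fish instead of a picture, and the fish lengths, the `train` and `predict` names, and the learning rate are all made-up assumptions.

```python
# Toy "salmon vs. sea bass" classifier. All numbers are invented for illustration.
# Each example: (fish length in metres, label) with 1 = salmon, 0 = sea bass.
TRAINING_DATA = [
    (0.55, 1), (0.60, 1), (0.65, 1), (0.70, 1),
    (0.30, 0), (0.35, 0), (0.40, 0), (0.45, 0),
]


def predict(weight, bias, length):
    """Guess 1 (salmon) if the weighted input is positive, else 0 (sea bass)."""
    return 1 if weight * length + bias > 0 else 0


def train(data, learning_rate=0.1, max_epochs=2000):
    """Repeatedly guess, compare with the right answer, and nudge the parameters."""
    weight, bias = 0.0, 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for length, label in data:
            error = label - predict(weight, bias, length)  # 0 when the guess is right
            if error != 0:
                weight += learning_rate * error * length
                bias += learning_rate * error
                mistakes += 1
        if mistakes == 0:  # every training example is now classified correctly
            break
    return weight, bias


weight, bias = train(TRAINING_DATA)
print(predict(weight, bias, 0.80))  # a long fish the model has never seen
print(predict(weight, bias, 0.25))  # a short fish the model has never seen
```

Nobody tells the program where the boundary between the two species lies. It starts out guessing badly and only ever receives "right" or "wrong" feedback, yet it ends up with a rule that also works on fish it has not seen before.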

The amazing thing is that this kind of “brute force” trial and error technique, given enough data, will produce remarkably accurate results, especially once the amount of training data rises above a minimum threshold. This is the reason why ChatGPT (based on GPT-3.5+), to take an example, has become so incredibly popular in recent months. It took ChatGPT five days (!) to get to one million users and roughly two months to reach an estimated 100 million - at the time, the fastest-growing consumer application ever. If you compare this to the vanilla GPT-3 or the previous generation GPT-2 [3], which were basically unknown outside of the research space, it tells you something about how incredibly powerful ChatGPT is (even though they are based on the same kind of technology).

Types of AI

When it comes to the topic of AI risk and alignment, there are three categories of AI you should be familiar with, popularized by the philosopher Nick Boström: Artificial Narrow Intelligence, Artificial General Intelligence, and Artificial Super Intelligence, abbreviated ANI, AGI, and ASI, respectively.

ANI refers to AI systems that can solve specific problems or perform specific tasks. They are adept at a single task but cannot complete tasks outside their specific “domain.” Examples include voice/image recognition and autonomous driving. An ANI is not necessarily simple or stupid in any meaningful sense, just specialized.

AGI refers to AI systems that can perform many intellectual tasks that are at least as difficult as those that a typical human can perform. AGI systems are capable of learning and adapting to new tasks and situations and can potentially perform any task that a human can perform. This is the gold standard researchers have been trying to get to for decades.

ASI refers to AI systems that are capable of performing intellectual tasks that are far beyond human capability. ASI systems would be able to learn and adapt to any task or situation and would be able to surpass human intelligence in every possible way. Once an ASI has surpassed human intelligence, it will keep improving iteratively at an ever-increasing and exponential pace, eventually becoming what can be best described as omniscient.

The big question in AI research now is if we will ever be able to create a truly general artificial intelligence - or a superintelligence, for that matter. Or whether we will “just” get better and better narrow AIs that, while far surpassing humans at the tasks they are built for, are nonetheless not generally intelligent enough to really surpass human intelligence or adaptability more broadly.

There are good reasons to assume that we might be able to create AGI within the next decade or decades, and there are equally good reasons to be skeptical we might ever do so or that it requires some completely new kind of technological advancement that we are nowhere near achieving. I will return to this question in future articles, but for now, it’s enough to point out that a lot of smart people think it could happen very soon. The median guess, according to a prediction market, is that it could happen already by 2031, and the consensus among researchers in the field is between 10 and 50 years, with a wide range of opinions on the likelihood and timeline.

What are we talking about when we talk about AGIs or ASIs?

One reason why people disagree about when we will get AGI, or whether or not we will ever develop one, is because they can’t really agree on what it is or even if we will be able to know that we have created one.

An AGI is usually defined as an AI system that can perform any intellectual task that a human can do. Additionally, it may also possess robotic capabilities that enable it to act as an intelligent agent in the world, completing economically valuable “real world” tasks or, at the very least, have the ability to instruct humans to do so.

There’s also the question of how we will know if we’ve created AGI. The classic benchmark for AI is the Turing Test or some version of it, which involves a human evaluator engaging in a natural language conversation with an AI system and a human without knowing which is which. AIs have arguably “passed” versions of the Turing Test already, yet most people don’t think current AIs are true AGIs.

In any case, when we talk about AIs that can pose an existential or catastrophic risk to humans, we assume that they are power-seeking “agents” with advanced planning capabilities and have the following three properties:

Ability to plan against important goals

Given an ultimate goal, an advanced AI would be able to make high-level and low-level plans for what tasks it must do in order to achieve its goal and adapt these plans as necessary. It would have a “world model.”

Strong strategic awareness

It would have the intelligence to realize that it’s part of a world with other intelligent agents that may interfere with the AI’s goals and be able to recognize obstacles in its way while capturing opportunities that present themselves.

Very advanced capabilities

Not only are the AIs capable of making advanced plans and executing with strategic awareness, but they also need to be fully capable of interacting with the world at multiple levels in order to complete all the tasks.

Advanced AIs would have agency in the sense that they can formulate goals and go about achieving those goals in an independent manner. They would be power-seeking, not necessarily in an evil mustache-twirling kind of way, but more so in an instrumental end-justifies-means kind of way because it’s easier to achieve your goals in the world (whether they are benevolent or evil) if you have more power. For instance, a politician may resort to dishonesty or accept questionable donations to secure a win in the upcoming election, not out of malice, but because they understand that they require political leverage to enact the positive changes they want for the world.

All this may strike you as something extremely arcane, unlikely, or even silly to worry about. But to understand why an advanced AI might behave like this, why it poses a risk to humans, and why it’s even possible to create such an AI in the first place, we need to consider a few fundamental concepts and ideas: instrumental convergence, the principal-agent problem, substrate independence, and a fast take-off scenario.

Instrumental convergence

Imagine that we create a powerful AI with all the necessary planning capabilities and agency and give it the goal of solving climate change for us. We hope that it will be able to invent a lot of new technologies and help us forecast climate to a much more accurate degree than we can now. But it quickly realizes that humans are one of the main contributors to climate change and decides to eliminate all humans to achieve its goal. Being an intelligent and power-seeking agent, it will also realize that we would probably try to stop it and may kill us just out of self-preservation (this is the paperclip maximizer).

Sufficiently intelligent systems (like humans or AGIs) may have different ultimate goals, but they will tend to converge on the same instrumental strategies or sub-goals; goals like self-preservation, resource acquisition, and freedom from interference (i.e., “power”). The idea with instrumental convergence is that even though we’d never want to explicitly create an AGI that can harm humans, it’s likely that any such system would eventually converge on one of a few strategies that entail eliminating or harming humans.
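A toy planner makes the idea of converging sub-goals concrete. The sketch below is an assumption-laden illustration, not a model of any real AI system: the three goals, their yields, the two available actions, and the rule that "acquire" triples the agent's resources are all invented. The planner simply tries every possible four-step plan and keeps the one that makes the most progress toward its goal.

```python
from itertools import product

ACTIONS = ("acquire", "work")

# Hypothetical final goals; the number is how much progress each unit of
# resources yields per "work" step. The values are arbitrary.
GOALS = {"paperclips": 5.0, "cancer research": 0.2, "stamp collecting": 1.0}


def progress(plan, yield_per_resource):
    """Simulate a plan: 'acquire' triples resources, 'work' turns them into progress."""
    resources, total = 1.0, 0.0
    for action in plan:
        if action == "acquire":
            resources *= 3
        else:
            total += resources * yield_per_resource
    return total


def best_plan(yield_per_resource, horizon=4):
    """Brute-force search over every possible plan of the given length."""
    return max(product(ACTIONS, repeat=horizon),
               key=lambda plan: progress(plan, yield_per_resource))


best = {goal: best_plan(rate) for goal, rate in GOALS.items()}
for goal, plan in best.items():
    print(goal, "->", plan)
```

Although the three goals have nothing in common, the best plan is the same for each of them: spend the first three steps acquiring resources and only then work on the goal itself. Nothing in the code tells the agent to "seek power"; it falls out of the search because more resources help with almost any objective.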

The principal-agent problem

When someone (the principal) gives an AI (the agent) a task, it’s often not possible to fully monitor and predict how the agent goes about solving this task. In fact, you often don’t want to have to describe exactly how to solve the task or reach the goal because, in that case, you might as well do it yourself. You expect that an intelligent agent can figure out how to do what you ask precisely because they are intelligent. The problem, however, is that since the agent is intelligent, it may not solve the task you give it in a manner that’s aligned with your goals.

The Genie in the Bottle is a story about the principal-agent problem. But we don’t need fantasy or sci-fi stories to understand the issue. Take, for example, the misalignment that often occurs between a company’s goals and the goals of the employees who work there. When management sets quarterly sales targets and incentivizes the sales representatives with bonuses or commissions, it’s not uncommon that you end up with employees gaming the system in order to maximize their bonuses at the expense of the company.

Substrate independence

There’s a deep question in biology and neuroscience with regard to intelligence, and that’s whether intelligence and consciousness can only arise in wetware - biological systems like brains - or whether it’s possible to replicate them in software using another substrate like silicon. If you buy that everything is made of matter and obeys physical laws and that human (or animal) brains are made of matter, then there shouldn’t be any magic to biological systems that makes them uniquely capable of exhibiting intelligence and consciousness. It’s just a matter of figuring out the right way to arrange matter and information to achieve these same capabilities in a different substrate.

This is what is referred to as substrate independence: the ability of an (artificial) intelligent system to function effectively across different hardware substrates. In other words, it can be implemented on different computer systems or even in different kinds of substrates, such as biological neurons or quantum computers.

If substrate independence is real, no physical law prevents us from inventing AGI, or prevents that intelligence from posing a risk to humans.

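Fast takeoff (recursive self-improvement)

If we do create AGI, there's a good chance it can result in a fast take-off scenario. The argument, usually framed as recursive self-improvement, is that once AGI is created, it will improve itself at an exponential rate, leading to a superintelligence within a very short period of time, perhaps even as short as a few days or less. 🤯 (The related "scaling hypothesis" is the narrower claim that AI capabilities keep improving as models are given more data and computing power.)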

This may sound far-fetched, but it’s not hard to see why it’s plausible. Improvements in humans and non-human animals are limited by slow biological evolution, but artificial intelligence could be improved through software updates or better hardware. An AI that can write code and improve itself faster and faster can get very intelligent, much faster than we can grasp intuitively. At first, it might just be able to improve its software by itself and needs humans or human systems to do things in the world. But it’s not unlikely that it will manipulate humans in clever ways. And it may figure out how to build advanced robotic or manufacturing capabilities that it can use to develop even more advanced capabilities out in the world.

Fast takeoff scenarios pose a risk since humans may not be able to control the AI once it achieves superintelligence, and this transition could happen very, very fast. A superintelligent AI could operate thousands of times faster than humans - a few days seems fast to us, but it could feel like years or even decades to the AI.
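The arithmetic behind these intuitions is simple enough to sketch. The numbers below are pure assumptions chosen for illustration (a 1,000x speed advantage, a system that gets twice as capable, or 10% more capable, with each round of self-improvement); nobody knows the real values, and the function names are made up.

```python
def subjective_years(wall_clock_days, speedup):
    """Years of 'thinking time' for a system running `speedup` times faster than a human."""
    return wall_clock_days * speedup / 365


def cycles_to_reach(target_multiple, gain_per_cycle):
    """Count self-improvement cycles until capability has grown by `target_multiple`."""
    capability, cycles = 1.0, 0
    while capability < target_multiple:
        capability *= gain_per_cycle
        cycles += 1
    return cycles


print(round(subjective_years(3, 1_000), 1))  # 3 days of our time at 1,000x speed
print(cycles_to_reach(1_000, 2.0))           # capability doubles every cycle
print(cycles_to_reach(1_000, 1.1))           # capability grows only 10% per cycle
```

Under these assumptions, three days of our time correspond to roughly eight years of thinking time for the AI, and ten doublings are enough for a thousandfold gain (2^10 = 1,024). Even the modest 10% case gets there in a few dozen cycles, which is why the length of each cycle matters so much for how fast a takeoff would be.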

AI risk and the alignment problem

Although the risks with current ANIs aren’t necessarily existential, it’s not hard to see how these AIs can negatively affect society, and already have. We’ve already experienced the effects of engagement-optimized social media algorithms, which have been linked to increases in teen depression and have arguably influenced election results (in rather extreme directions). Although Meta, to take an example, isn’t actively trying to undermine humans or cause harm, the vast deployment of algorithmically driven social feeds whose “goal” is to drive engagement has arguably led to outcomes that are misaligned with our broader goals of societal stability and well-being.

The concern, then, is that this basic misalignment between the proximate goals of a specific system or algorithm (generating more “likes” and engagement) and the broader goals of an organization or society as a whole (such as connectedness and wellbeing) will become even more problematic when we get AGIs.

We don’t know how to align advanced AIs

One of the fundamental problems with the alignment of AGIs is that we just don’t have the knowledge about how to specify our goals in a way that an AGI could “understand.” Moreover, it’s not even clear that we can agree on what goals or values to specify at all. And, of course, even if we could specify our goals coherently, there are many misaligned ways in which an AGI can attempt to achieve those goals (due to the principal-agent problem).

If we develop an AI that is “unfriendly” to our needs and values and it becomes intelligent enough to realize that humans are a threat to its existence, it is likely to take action to eliminate us (instrumental convergence) or, at the very least, to try to ensure we can’t stop it. An advanced AI need not create an army of terminators or deploy nuclear weapons against us; it could, from the safety of a research lab, create superweapons like viruses or nanobots that are perfectly engineered to kill humans.

It might also be that an advanced AI is so different from us and so much more intelligent than us that it’s completely indifferent to our needs or values.

Consider the difference in intelligence and capabilities between humans and ants. It’s not that we hate ants or want to harm them; it’s that when it comes to our goals and actions in the world, the goals of ants don’t really factor in. We won’t think twice about the feelings of ants if we’re building a highway through a forest and we have to destroy their ant colony. It’s just so much less important a concern than our need for a new road and the broader goal of improving infrastructure and transportation, for example.

In a similar fashion, a superintelligent AI may develop its own more important goals and values. Values may “drift” away from human values in a direction that is unfavorable to humans. Just like our values are different from those of chimpanzees or ants, if they could express them, we should expect something much more intelligent than us to be able to develop its own values as a result of being very different and pursuing its own goals. Even if it/they don’t kill us, it would lead to total disempowerment, in much the same way less intelligent animals are essentially subservient to the goals of humans.

You might think that as long as AIs are intelligent enough, they will automatically come to value life and well-being much like we do. But intelligence and values are unrelated; being highly intelligent does not guarantee having "good" values. Goals and intelligence are orthogonal to each other.

Alignment is hard in general

Smart people have been thinking for decades about how to address the alignment issue both from the point of view of computer science and philosophy. Yet we don’t have a solution that people agree on or that we can even know would work in practice.

Just consider the fact that goal alignment between humans is hard enough already. If you take a broad enough view, all human relationships, our societal issues, and historical conflicts are really alignment problems. Either two people or two tribes are non-aligned with regard to their goals and can't resolve their differences, or they are somewhat aligned longer term but disagree on the best methods of achieving their goals. As we know from history, even parties that are somewhat aligned can disagree enough so as to resort to bloody conflict (e.g., Catholicism vs. Protestantism during the Thirty Years' War). With such a lousy track record, how can we ever hope to align a superintelligent AI or even just a very intelligent one?

AIs are complex adaptive systems. They are made up of many different interacting parts and processes that, taken together, produce non-linear and emergent behaviors that are inherently hard, if not impossible, to predict. Moreover, the internal workings and processes of advanced AIs tend to be quite opaque. This can create unforeseen consequences: We may not be able to anticipate all the ways in which advanced AI could impact the world, and some of these consequences could be catastrophic.

The measurement is not the goal

We often create proxy metrics that attempt to measure what we value but that aren’t really the thing we value itself. GDP is a pretty good measurement of growth and even human well-being (at least for developing nations), but there are many ways in which optimizing for GDP growth as a proxy goal can have bad consequences. A measure that becomes a target ceases to be a good measure (an observation known as Goodhart’s law). With powerful AIs in our hands, we would be incentivized to hand over power (even inadvertently) to them/it because it makes economic sense.

Because of concepts like the principal-agent problem, we would have to specify the proxy goals for the AI to attain that we believe mirror what we want as closely as possible, but that still wouldn’t be exactly what we want. This could by itself lead to problems, but at first, we may be able to catch the AI and correct the proxy goals. Yet over time, as AIs become more and more powerful and intelligent, they would be able to prevent us from interfering - and would probably be incentivized to do so for self-preservation reasons - and we would gradually lose control.
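The gap between a proxy and the thing we actually want can be shown with a deliberately crude sketch. Everything in it is an assumption made up for illustration: an agent has ten units of effort to split between real work and gaming the metric, gaming is assumed to score three times better on the proxy, and it is assumed to destroy real value. The function names are hypothetical as well.

```python
def proxy_metric(real_work, gaming):
    """What we measure: gaming the metric is assumed to pay three times better."""
    return real_work + 3 * gaming


def true_value(real_work, gaming):
    """What we actually care about: gaming is assumed to destroy value."""
    return real_work - gaming


def best_allocation(score, budget=10):
    """Split a fixed effort budget between real work and gaming to maximize `score`."""
    options = [(real, budget - real) for real in range(budget + 1)]
    return max(options, key=lambda split: score(*split))


chosen = best_allocation(proxy_metric)  # what a pure proxy-optimizer picks
wanted = best_allocation(true_value)    # what we would have wanted it to pick
print(chosen, true_value(*chosen))
print(wanted, true_value(*wanted))
```

The optimizer that only sees the proxy puts all ten units into gaming and ends up with a true value of -10, while the allocation we wanted puts everything into real work for a true value of +10. The better the optimizer, the more reliably it finds the gap between the two, which is the worry with handing proxy goals to a very capable AI.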

The control problem

Can we even control something that is intelligent enough to have its own goals?

One way to view a superintelligent AI or even just a very, very smart AI is as an alien species. In fact, I think this is the right way to view it. But rather than being a species that visits us from outer space, it’s something we create.

As we’ve seen many times in human and evolutionary history, when a more intelligent or technologically advanced species arrives, it usually dominates the lesser species or culture - or drives it extinct.

More fundamentally, would we want or be able to create a true AGI that isn’t at least somewhat beyond our ability to control? [4] Arguably, what makes other people intelligent agents is that they have a will of their own and that they can’t be easily controlled; they can be mischievous or lie. But if your children were just automatons that followed every command, would you even consider them to be human? Our inability to really control each other is an important and fundamental feature of our society and what it means to be human. An AI that can be completely controlled may not be a “true” AGI.

And this is also the biggest problem. How can we control something that is both more intelligent than us across every dimension and has a will [5] of its own? If we constrain an AI enough to “control it,” then we might not get the full benefits and power of AGI. And we can’t really control a true AGI, for then it wouldn’t be an AGI. It’s a tough circle to square.

Self-improving AI and the fast take-off scenario

As described above, if an AGI can recursively improve its own algorithms and hardware, it will quickly develop into an ASI far beyond our ability to control it.

This kind of fast take-off is known as the "intelligence explosion" or "singularity" scenario, and it poses an existential risk to humanity because everything past the point of the singularity is completely unknown to us. Things could get really weird. The world could start changing so fast that changes that previously occurred over centuries or decades happen over years, then months, then days. While it’s impossible to know how things turn out in such an extreme world, there are many ways in which it can turn out badly for us (probably more ways than it could go very well).

To further emphasize how different this would be compared to what we are used to, just consider the difference in processing speeds between humans and computers today. An AGI or ASI running on silicon would be able to do the work that takes an entire team of human scientists weeks or months in a matter of minutes, if not less. And assuming the AI continues to improve, the delta will continue to grow to the point where anything we do in comparison to AIs is just painfully slow.

Some people say that we will have time to adapt or to hit the brakes. But a true AGI should already have the ability to deceive us and lie to us, to collude and coordinate with other AIs, or, indeed, with humans. Knowing all this, we would try to develop other AIs to align or check these AI systems, but an AGI would plausibly understand this risk and might then collude with them to form a giant AI conspiracy that makes it nearly impossible to stop.

So even if there would be time to stop it or improve its alignment with us, we can’t count on that being an option when we notice that it is intelligent enough - if we notice at all.

The time frame doesn’t matter much

Ultimately, we don’t need a fast takeoff for AGI and, certainly, ASI to be worrying. In fact, we can completely disregard the specific timeline. It could happen in five, in fifty, or in five hundred years, and we are in big trouble regardless. More time is presumably better than less in terms of having more time for research and preparation. But in the end, it doesn’t matter. If you buy that AGI is possible at any point in the future, you should also take the alignment problem seriously.

Consider the following thought experiment: NASA receives a message from outer space, a message that is indisputably from an alien civilization (let’s say every other major space agency, even the Russian and Chinese ones, can verify it and believe it). The message just reads: “Get ready - we will visit Earth in 50 years.” There may be hundreds of plausible reasons for their visit, and even though most of them might be benign or neutral, it’s enough that one of those reasons is “take all of Earth’s lithium and kill everyone standing in our way” for us to take this seriously and do anything we can to prepare. If they say 500 or 5000 years, it might make us a little less worried, but we’d still be forced to take it seriously.

AGIs are also software

AIs are software, and software has bugs. It’s impossible to test for all possible scenarios and use cases ahead of time, so we should expect AI systems to fail and produce errors that are impossible to account for completely. It need not be an AGI or ASI for this to be problematic. Complex software systems with AI algorithms are already operating our cars, factories, and power plants. Making them even more powerful and relying on them more and more raises the stakes significantly.

What’s more, software is vulnerable to many kinds of security threats like malware and viruses. The malware can be created by other human organizations or by other AIs with malicious goals. (In fact, hacking might be one of the methods employed by an advanced AI in the existential AI scenarios discussed previously.)

And, of course, an AI might be designed and programmed with certain goals or limitations that, once it becomes highly intelligent, cause it to behave in dangerous ways. In other words, much like we can fail in designing perfect systems elsewhere, we may inadvertently design an AGI in such a way that it produces bad consequences, just not because of bugs in the code.

What does a world run by ASI look like?

If we do get a superadvanced AI, it’s likely that there are more ways things can turn out badly for us than ways in which they can turn out well.

At the very least, we shouldn’t assume that things will go well for us. If you imagine what the world would look like if we had voluntarily or involuntarily ceded control to a superadvanced AI, there aren’t that many versions of this world where things are great for humans, if we exist at all. Once we have been disempowered by a more advanced intelligence, every future generation of humans will most likely experience one of the following realities:

We are pets:

In a world where AI is superadvanced, we could be viewed as no more than pets, creatures to be cared for and even loved by our AI overlords. However, in this scenario, we would be completely disempowered and reliant on them, with our lives and livelihoods at their mercy. This might be a very, very good kind of existence from a purely hedonic point of view, free from suffering and with access to anything we want, but something about the lack of freedom seems unpalatable to most humans.

We are slaves:

Another way a world with superadvanced AI could look is if we become nothing more than slaves to the machines. In the Matrix movies, this is essentially what has become of humans. In this case, we would work endlessly for or be used by advanced computer systems with all aspects of our lives rigorously controlled and our freedom restrained beyond imagination.

We are dead:

In a world with superadvanced AIs, we could potentially see the end of the human race as we know it. For reasons partly explained above, it's possible that they may perceive us as obsolete or as a threat to their existence, or that we may simply be collateral damage. In such a scenario, they/it might choose to eradicate all humans.

We are unimportant:

In a world with superadvanced AIs, we could just be rendered completely unimportant, possibly leading to our death as a species. A quickly self-improving, advanced AI could find ways to exist and achieve its objectives without humans affecting its agenda. In such a scenario, it might simply ignore our existence and carry on without noticing or caring about us.

Example: If a superadvanced AI is tasked with efficiently running a factory, it may view its human operators as mere lifeless cogs in a machine, purely doing a job. Inefficient workers may be eliminated and replaced with machines leading to the obsolescence of human involvement.

Regular AI can be bad enough

Aside from existential risks emerging as a result of AGI, very good ANIs may pose catastrophic risks once they are adopted widely enough and are used as tools for bad purposes. Consider some of the following examples:

AI makes it possible to create extremely efficient and lethal weapons, like autonomous drones, that can be used to target specific individuals or even groups of people with specific traits or looks. Not only is this an active area of R&D, but such drones have reportedly already been used in combat as of 2020.

AI makes it possible to create entirely new technologies much faster and more cheaply. These technologies can pose grave risks or be used to harm a lot of people, like viruses perfectly engineered to kill humans and replicate quickly, making the COVID-19 pandemic look like a joke.

AI can be used by totalitarian governments to spy on and monitor their citizens on a massive scale while spreading misinformation or manipulating them with unprecedented accuracy.

We can safely assume that most governments, both democratic and autocratic, are already doing this and that AI is being used to facilitate it (such as through data analysis at scale). There’s no reason to assume they will do this any less in the future as AIs become more advanced and cost-effective.

And even disregarding these sorts of fairly dystopian possibilities, we can already see how AI has had bad consequences. As I mentioned above, engagement- and attention-maximizing social media feeds manipulate human emotions, biases, and behaviors (inadvertently), and have contributed to an increase in misinformation and to specific issues like teen depression. As Tristan Harris said in a recent presentation on the dangers of AI, we’ve already lost first contact with this alien intelligence.

What can be done to align AI?

There are several strategies and techniques that have been proposed to align AI with human values and ensure its ethical development and deployment, some of which are already being used with more or less success.

Inverse Reinforcement Learning (IRL)

Inverse Reinforcement Learning is a technique where an AI learns about human values and preferences by observing human behavior. By analyzing the actions of humans in various situations, the AI can deduce the underlying reward function or utility that drives their decision-making. A reward function is a proxy programmed to motivate behavior. For humans and non-human animals, basic reward functions could include things like getting food, sex, and sleep, as these behaviors increase the likelihood of survival and reproduction.

IRL can be used to train self-driving car AIs: they are trained on data from human drivers in various scenarios and deduce the implicit reward function that humans have while driving, which could be something like, “Get to the destination as fast as possible but don’t hit anyone.”

When an AI has learned the “right” reward function, it can then be used to guide the AI's own actions, ensuring that its decisions align with human values.
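To make the idea a bit more concrete, here is a minimal sketch of reward inference in a one-decision toy setting. It is not how a real self-driving system is trained. Everything in it is an assumption made for illustration: the three actions, their two features (progress and collision risk), the invented demonstrations, and the modeling choice that drivers pick actions with probability proportional to the exponential of a linear reward.

```python
import math

# Hypothetical features per driving action: (progress toward destination, collision risk).
ACTIONS = {
    "speed_through_crosswalk": (1.0, 0.9),
    "slow_down_and_pass": (0.6, 0.1),
    "stop_and_wait": (0.1, 0.0),
}

# Invented demonstrations of what human drivers chose at a busy crosswalk.
DEMONSTRATIONS = (
    ["slow_down_and_pass"] * 7 + ["stop_and_wait"] * 2 + ["speed_through_crosswalk"] * 1
)


def choice_probabilities(weights):
    """Chance of each action for a noisily rational driver with these reward weights."""
    scores = {
        action: sum(w * f for w, f in zip(weights, features))
        for action, features in ACTIONS.items()
    }
    top = max(scores.values())  # subtract the max for numerical stability
    exp_scores = {action: math.exp(s - top) for action, s in scores.items()}
    total = sum(exp_scores.values())
    return {action: e / total for action, e in exp_scores.items()}


def infer_reward_weights(demonstrations, steps=5000, learning_rate=0.5):
    """Fit reward weights by gradient ascent on the likelihood of the demonstrations."""
    n_features = 2
    observed = [
        sum(ACTIONS[a][i] for a in demonstrations) / len(demonstrations)
        for i in range(n_features)
    ]
    weights = [0.0] * n_features
    for _ in range(steps):
        probs = choice_probabilities(weights)
        expected = [
            sum(probs[a] * ACTIONS[a][i] for a in ACTIONS) for i in range(n_features)
        ]
        # Gradient of the log-likelihood: observed minus expected feature averages.
        weights = [
            w + learning_rate * (o - e) for w, o, e in zip(weights, observed, expected)
        ]
    return weights


weights = infer_reward_weights(DEMONSTRATIONS)
print("progress weight:", round(weights[0], 2))
print("collision-risk weight:", round(weights[1], 2))
```

With these made-up demonstrations, the inferred weight on progress should come out positive and the weight on collision risk negative, which is the toy version of “get there quickly but don’t hit anyone.” Note that the AI only recovers what the observed behavior implies, so sloppy or biased demonstrations would yield a sloppy or biased reward function.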

Cooperative Inverse Reinforcement Learning (CIRL)

CIRL is an extension of IRL, which focuses on AI and humans working together to achieve a common goal. In CIRL, the AI not only learns from human behavior but also takes into account the human's uncertainty and knowledge about the environment. This allows the AI to understand human intentions better and thus make decisions that are more closely aligned with human values.

The computer scientist Stuart Russell (who partly invented IRL) advocates for AI researchers and developers to program in a basic uncertainty about what the “right thing” is - what the AI’s goals are - and that it must be deferential to human goals and behavior. In that way, the AI will have an exploratory approach towards optimizing for a goal, and the uncertainty will force it to learn from humans and from its environment, exploring iteratively to learn which values matter.
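The “built-in uncertainty” idea can be sketched in a few lines. This is a toy Bayesian illustration and not the full CIRL formulation: the two hypotheses about what the human wants, the reward numbers, the `rationality` parameter, and the 0.9 confidence threshold are all assumptions invented for the example.

```python
import math

# Two hypothetical guesses about what the human cares about, as rewards per route.
HYPOTHESES = {
    "values_speed": {"fast_route": 1.0, "safe_route": 0.2},
    "values_safety": {"fast_route": 0.1, "safe_route": 1.0},
}


def likelihood(choice, rewards, rationality=1.0):
    """Probability that a noisily rational human picks `choice` given `rewards`."""
    weights = {option: math.exp(rationality * r) for option, r in rewards.items()}
    return weights[choice] / sum(weights.values())


def update_belief(belief, observed_choice):
    """Bayes' rule: reweight each hypothesis by how well it explains the choice."""
    unnormalized = {
        h: belief[h] * likelihood(observed_choice, HYPOTHESES[h]) for h in belief
    }
    total = sum(unnormalized.values())
    return {h: p / total for h, p in unnormalized.items()}


def act_or_defer(belief, confidence_threshold=0.9):
    """Act on the most likely goal only when confident; otherwise ask the human."""
    best_hypothesis, probability = max(belief.items(), key=lambda item: item[1])
    if probability < confidence_threshold:
        return "ask_human"
    rewards = HYPOTHESES[best_hypothesis]
    return max(rewards, key=rewards.get)


belief = {"values_speed": 0.5, "values_safety": 0.5}
print(act_or_defer(belief))
for _ in range(3):
    belief = update_belief(belief, "safe_route")
print(act_or_defer(belief))
```

The agent starts out unsure and defers to the human. Only after repeatedly watching the human choose the safe route does its belief become confident enough to act on its own, which is the behavior the uncertainty is meant to produce.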

Value Learning

Value Learning is another approach that aims to align AI with human values by teaching the AI to learn human values directly from humans. This can be achieved through techniques like active learning or preference elicitation, where the AI asks questions or seeks feedback from humans to refine its understanding of their values and preferences.
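As a rough illustration of preference elicitation, the sketch below has an AI ask a person pairwise questions (“do you prefer A over B?”) and always pick the question that best splits the remaining possibilities. The three values being ranked and the simulated human are hypothetical, and the sketch assumes the person answers consistently, which real people often don’t.

```python
from itertools import combinations, permutations

# Hypothetical things a person might care about, to be ranked by the AI.
OPTIONS = ["privacy", "convenience", "cost"]


def prefers(ranking, a, b):
    """True if `a` is ranked above `b` in `ranking`."""
    return ranking.index(a) < ranking.index(b)


def most_informative_question(candidates):
    """Pick the pair whose answer splits the remaining rankings most evenly."""
    def imbalance(pair):
        a, b = pair
        yes = sum(prefers(r, a, b) for r in candidates)
        return abs(2 * yes - len(candidates))
    return min(combinations(OPTIONS, 2), key=imbalance)


def elicit(ask_human):
    """Ask pairwise questions until only one ranking fits all the answers."""
    candidates = [list(p) for p in permutations(OPTIONS)]
    questions = 0
    while len(candidates) > 1:
        a, b = most_informative_question(candidates)
        answer = ask_human(a, b)
        candidates = [r for r in candidates if prefers(r, a, b) == answer]
        questions += 1
    return candidates[0], questions


# Simulated human with a fixed, consistent ranking (an assumption for the demo).
true_ranking = ["cost", "privacy", "convenience"]
learned, asked = elicit(lambda a, b: prefers(true_ranking, a, b))
print(learned, "after", asked, "questions")
```

Choosing questions this way is what makes the learning “active”: the AI spends the human’s limited attention on the comparisons it is most unsure about instead of asking at random.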

AI ethics research

Conducting interdisciplinary research on the ethical, legal, and social implications of AI is essential to better understand the potential risks and benefits of AI systems. By involving experts from various fields, such as philosophy, sociology, psychology, and law, we can ensure a comprehensive understanding of the complex issues surrounding AI ethics and develop guidelines for its responsible use in the future.[6]

Policy and regulation

Governments and regulatory bodies should establish clear policies and regulations for developing and deploying AI technologies. This may involve setting safety standards, ensuring transparency and accountability, and creating mechanisms for public input and oversight. It could make sense to create an FDA-like regulatory body that works with the industry to certify new AI systems in order to ensure “alignment.” There’s certainly some risk of overregulation and stifling technological and economic growth, but given the stakes and the potential of AI, government(s) should be involved.

Industry best practices and Standardization

Companies and organizations should adopt industry standards and best practices for developing and deploying AI systems. This may include adhering to ethical guidelines, implementing robust safety measures, and ensuring transparency and accountability in the use of AI technologies. There’s precedent for this in the open-source community and in the telecom and IT industries, so it should also be possible in AI.

It might not be enough

Despite all these ideas and approaches (most of which are already being worked on to some extent), we don’t actually know how to align AI reliably, and certainly not superintelligent AI. Moreover, most of the fundamental issues discussed previously, like instrumental convergence, the principal-agent problem, and fast take-off scenarios, aren’t provably and reliably solved just because we try to teach AIs human ethics or do heavy reinforcement learning. For example, OpenAI trained ChatGPT with a technique called reinforcement learning from human feedback (RLHF). Human AI trainers first provide conversations in which they play both the user and the AI assistant roles, and the model is fine-tuned on this data. Trainers then rank alternative model responses, those rankings are used to train a reward model, and the model is optimized further against that reward model with reinforcement learning. But as we've seen with ChatGPT and similar AI systems, they can still generate outputs that are undesirable and misaligned with human values.
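One publicly described ingredient of RLHF is a reward model fitted to human comparisons between responses. The sketch below is a heavily simplified stand-in: it learns a single score per canned response with a Bradley-Terry style model, where a real system would train a neural network over text. The response labels and the comparison counts are invented for illustration.

```python
import math

RESPONSES = ["confident_but_wrong", "hedged_and_correct", "refuses_to_answer"]

# Invented rater data as (preferred, rejected) pairs. Here raters slightly
# favor the confident-sounding answer even though it is wrong.
COMPARISONS = (
    [("confident_but_wrong", "hedged_and_correct")] * 6
    + [("hedged_and_correct", "confident_but_wrong")] * 4
    + [("hedged_and_correct", "refuses_to_answer")] * 9
    + [("refuses_to_answer", "hedged_and_correct")] * 1
)


def fit_reward_model(comparisons, steps=2000, learning_rate=1.0):
    """Learn one scalar reward per response from pairwise human preferences."""
    reward = {response: 0.0 for response in RESPONSES}
    for _ in range(steps):
        gradient = {response: 0.0 for response in RESPONSES}
        for winner, loser in comparisons:
            # Modeled probability that the rater prefers `winner` over `loser`.
            p_win = 1.0 / (1.0 + math.exp(reward[loser] - reward[winner]))
            gradient[winner] += 1.0 - p_win
            gradient[loser] -= 1.0 - p_win
        for response in RESPONSES:
            reward[response] += learning_rate * gradient[response] / len(comparisons)
    return reward


reward = fit_reward_model(COMPARISONS)
for response in sorted(reward, key=reward.get, reverse=True):
    print(response, round(reward[response], 2))
```

With this made-up data, the learned reward ranks the confident but wrong answer highest. The reward model faithfully captures what the raters preferred, not what is actually true or good, and any system optimized against it inherits that gap.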

Just like most research papers and studies tend to conclude: more research is needed.

Why not just unplug the AI?

There are plenty of arguments against the existential risk hypothesis, some better than others. Perhaps the most common one is that we can always just “unplug” the AI, given that it’s software running on computers. Although this is theoretically possible if we’re talking about an AI that’s running on a computer in a lab somewhere, once the AI is out in the world and has access to the Internet, or indeed if there are multiple AIs running on multiple computers or networks of computers, it’s increasingly unlikely that this strategy would work. How would you unplug the Internet?

Conclusion

The AI alignment problem is fundamentally a question of what happens when we create machines that are much more intelligent than us and how we can control them, if at all. The risks posed by AI include existential risks to humans, meaning extinction, as well as other forms of catastrophic risks that may impact human well-being and societal stability.

Although there are plenty of credible benefits that would come with an advanced AI, such as significant scientific advancements in medicine, energy production, and space exploration, superadvanced AI also brings along a lot of new risks, given that it might have goals that are unaligned with our goals. This means that we need to take the risks posed by AI seriously and consider ways to align AI with human values and interests.

Perhaps you still find all this very far-fetched or even hyperbolic, bordering on absurd. I largely feel this way too, yet at an intellectual level, I understand the risks and why we need to take them seriously.

With this article, my goal has been to improve your (and my own) understanding of the risks and nudge you in the direction of being at least a bit more worried, given a starting point of not really having thought about this very much at all. Has this changed your thinking in any way?

In a follow-up article to this one, I will address some of the counter-arguments to the ideas discussed above and give reasons why AIs are unlikely to kill everyone.

Many books and movies have been made about this age-old story. I fondly remember the movie Bedazzled from 2000, where the protagonist, played by Brendan Fraser, sells his soul to the Devil (played by Liz Hurley) in exchange for seven wishes, all of which are granted literally and turn out disastrously (they were not what he really wanted!).

No one actually believes that an existential catastrophe will play out exactly like the paperclip maximizer scenario, but it’s an instructive thought experiment.

GPT-2 has 1.5 billion parameters, GPT-3 has 175 billion, and GPT-4 probably has more than 1 trillion, but it has not been officially disclosed. A parameter in such a model is like a particular setting, and the total count of all the parameters roughly determines its capabilities.

By control, I don’t mean that we have no ability to modify or impact the goals or behaviors of AIs (or people), but rather that we don’t have control over how they go about achieving their goals, and that they also have their own goals and ideas as intelligent agents.

Having a will of its own doesn’t necessarily mean that it is conscious or has “free will,” simply that it develops goals independently from what we say or want.

Interestingly, but perhaps not surprisingly, the most prominent figures and commentators in the AI safety/risk space over the past decades have often been philosophers, mathematicians, computer scientists, and people with similar backgrounds. This goes some way to explain the types of issues and risks that have been emphasized and the conclusions that have been reached (like those above). It will be interesting to see how or if this changes as more people with different backgrounds address AI risk and safety.
